The 8 Tribes of AI

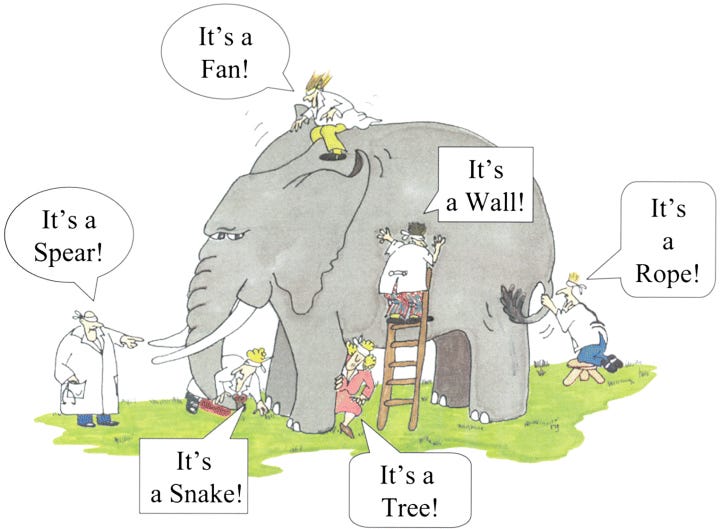

Blind men touching the Shoggoth elephant

This work is supported by excellent patrons and organizations.

AI is very much The Current Thing.

Everyone seems to have their take. AI good. AI bad. AI fine.

Which one is it? AI complicated, of course. :)

Whenever something new emerges, we’re all just blind men touching the elephant. The elephant looks different depending on which part you touch:

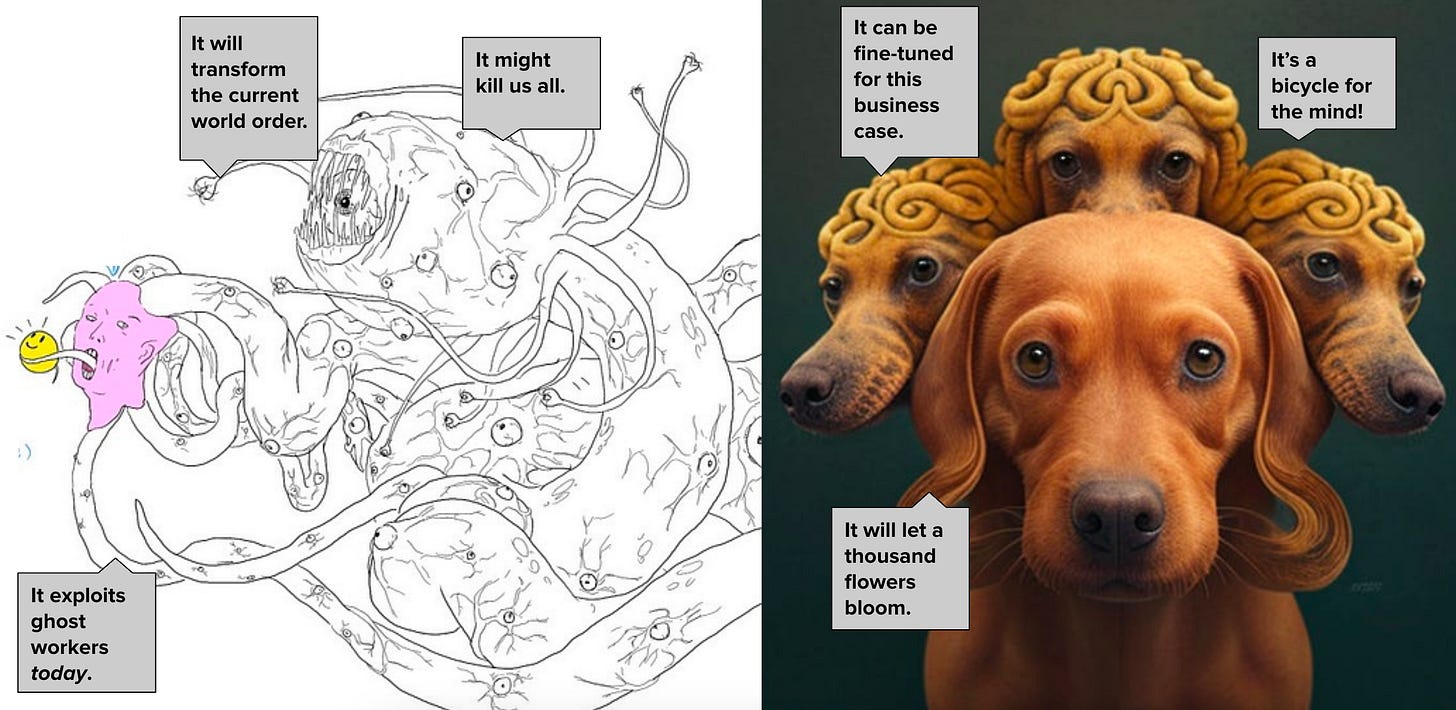

With AI, we’re all blind men touching a Shoggoth/Doggoth:

I see roughly 8 tribes of AI:

First, you have three builders, working on each layer of the AI stack:

The Shoggoth Summoners are building foundational models

That are fine-tuned and given a UI by the GPT Wrappers

That are then actually used by the LLM Psychologists

Second, you have three commentators who are worried about different parts of the AI timeline:

AI Doomers are feeling doomy about the long term

Alarmed Globalists are alarmed about the medium term

AI Justice Activists are worried about the impacts today

Finally, you have:

Progress Agnostics, who, on the margin, aren’t worried too much

Intelligence Aligners, who are trying to use the AIs themselves to ensure AI value alignment

Let’s spend a paragraph looking at each more closely. Plus, we can look at the fun Midjourney pictures that I made!

I. Builders

Shoggoth Summoners

These are the companies building foundational AI models. For text-based LLMs, each effort pairs a startup with a Big Tech company:

OpenAI + Microsoft

Anthropic + Google

The generative image space, meanwhile, is a bit less traditional: open-source Stable Diffusion and 10-person Midjourney.

GPT Wrappers

Then there are the businesses fine-tuning and adding a UX layer on top of GPT. Half of the latest YC batch are GPT Wrappers, as are ChatGPT plugins like Instacart and Expedia.

The jury is out on which GPT Wrappers will turn into big businesses, but some definitely will:

LLM Psychologists

Finally, there are the LLM copilots: people who talk to LLMs to supercharge their productivity.

Instead of Python, coders now believe:

Outside of coding: writers, graphic novelists, and artists are now seeing themselves as creative directors, orchestrating a team of Lalambdians.

LLM Ops are the new People Ops.

II. Commentators

AI Doomers

X-risk Doomers like Eliezer Yudkowsky are (rightfully-ish) worried that we might all die.

They point to surveys like the one below that show how ~10% of AI researchers believe this technology is likely to lead to human extinction.

(Black is bad outcome. Yellow is good outcome.)

Alarmed Globalists

Alarmed Globalists are more worried about the medium-term impacts of AI. What will these AIs do to our information ecosystem, to teen health, and to the current economic order?

4,000+ of these folks (including Elon Musk, Yuval Noah Harari, and others) signed a letter to Pause Giant AI Experiments:

“We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.”

Of course, the signatories don’t include anyone working at OpenAI, Anthropic, etc.

AI Justice Activists

Finally, folks like Timnit Gebru are worried about the impacts of AI today.

They highlight the low-paid ghost work that goes into tuning models with RLHF, the explosion of synthetic media polluting our information commons, and the continued concentration of power in the hands of Surveillance Capitalists like Big Tech.

Progress Agnostics

But not all folks are worried about AI. Many think the good will outweigh the bad.

Or, as Tyler Cowen notes, there’s just so much uncertainty with AI tech.

We didn’t know what would happen with the printing press. We don’t know what will happen with AI.

Tyler:

The case for destruction is so much more readily articulable — “boom!”

Yet at some point your inner Hayekian has to take over and pull you away from those concerns. Existential risk from AI is indeed a distant possibility, just like every other future you might be trying to imagine.

All the possibilities are distant, I cannot stress that enough.

Intelligence Aligners

Finally, there are those who are trying to align AI with AI.

Sometimes this happens within the Shoggoth Summoners. For example, Sam Altman has talked about how both RLHF and interpretability don’t just help with alignment, but make more capable models. Alignment and capabilities are not orthogonal.

And sometimes this happens in the wider engineering community. For example, the Collective Intelligence Project, AI Objectives Institute, and others are looking to use AI to understand our values, then use those values to align AI.

Twitter collapsed context into bite-sized tweets. LLMs can expand context into more nuanced interactions between and within humans and machines.

///

Let me know if you think I’m missing a tribe!

- Rhys

❤️ Thanks to my generous patrons below ❤️

If you’d like to become a patron to help us map & build the frontier, please do so here. (Or pledge on Substack itself.) Thanks!

Stardust Evolving, Doug Petkanics, Daniel Friedman, Tom Higley, Christian Ryther, Maciej Olpinski, Jonathan Washburn, Audra Jacobi, Patrick Walker, David Hanna, Benjamin Bratton, Michael Groeneman, Haseeb Qureshi, Jim Rutt, Brian Crain, Matt Lindmark, Colin Wielga, Malcolm Ocean, John Lindmark, Ref Lindmark, Peter Rogers, Denise Beighley, Scott Levi, Harry Lindmark, Simon de la Rouviere, Jonny Dubowsky, and Katie Powell.