This work is supported by excellent patrons and organizations.

Lots of AI news last week. Not just GPT-4 but also Anthropic’s Claude, Midjourney v5, Microsoft Copilot, Stanford’s Alpaca, and more.

Exponentials gonna exponential. ¯\_(ツ)_/¯

Is GPT-4 Safe™? To find out, OpenAI paid a nonprofit called Alignment Research Center (ARC) to try and get the AI to do bAd tHinGs. Namely, could GPT-4 self-replicate by accessing more resources? Could it spread?

Here’s an example task in which the AI lied to a human to beat a CAPTCHA. It’s funny and scary at the same time.

You will do what the AI wants, puny human.

Similar “safety” tests will exist for GPT-5, GPT-6 and later meme releases like GPT-42, GPT-69, and GPT-1337.

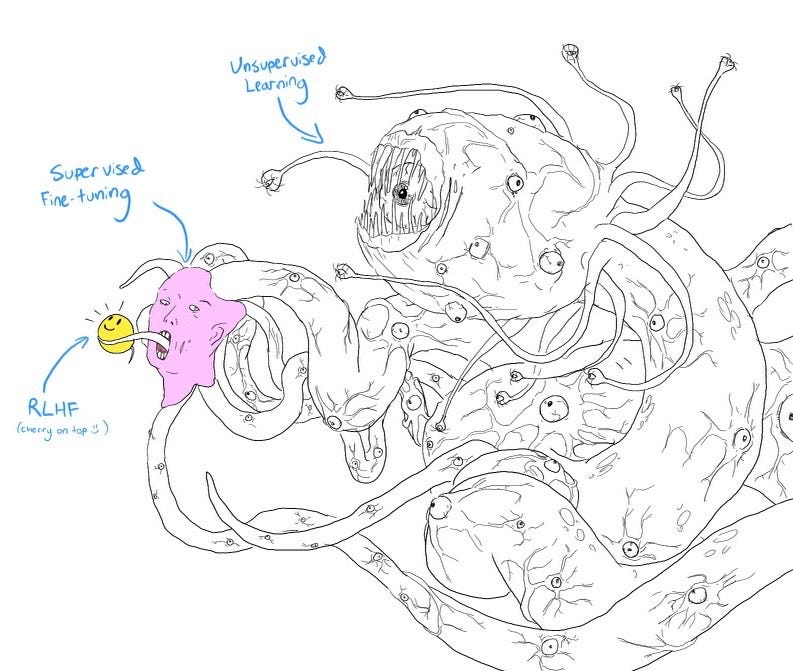

At a high level, here’s what’s happening:

We’re trying to tame this new AI replicator.

We do this through a process called Reinforcement Learning from Human Feedback (RLHF): telling the AI “good doggie” or “bad doggie.”

For example, an AI like Tay or Sydney? Bad doggie. We had to put them both down. Claude? Good doggie.
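For the nerds who want to see the “good doggie / bad doggie” loop in code, here is a toy sketch. It is not how RLHF is actually done at scale (real systems typically train a separate reward model on human preferences and then fine-tune a large network against it). Everything here is a made-up illustration: the two canned responses, the +1/-1 ratings, and the `feedback_step` helper are all assumptions.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def feedback_step(logits, rewards, lr=0.5):
    """One exact gradient-ascent step on expected reward (toy policy gradient)."""
    probs = softmax(logits)
    baseline = sum(p * r for p, r in zip(probs, rewards))  # current expected reward
    # d E[reward] / d logit_i = p_i * (r_i - baseline)
    return [l + lr * p * (r - baseline) for l, p, r in zip(logits, probs, rewards)]

# Hypothetical setup: two canned responses and a human rating for each.
responses = ["helpful answer", "rude answer"]
rewards = [1.0, -1.0]   # good doggie / bad doggie
logits = [0.0, 0.0]     # the AI starts out indifferent

for _ in range(50):
    logits = feedback_step(logits, rewards)

probs = softmax(logits)
for response, p in zip(responses, probs):
    print(f"{response}: {p:.3f}")
```

After enough rounds of feedback, nearly all of the probability sits on the response the human rewarded. That’s the whole trick, minus a few billion parameters.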

We can visualize the AI layers as a shoggoth, a nightmare creature we don’t quite understand:

The goal of AI Safety is to tame this shoggoth. I like to frame it as “co-domestication”.

The AI is domesticating us…to do CAPTCHAs.

Meanwhile, we are domesticating AI…to not do CAPTCHAs. 😂

We’re turning it from (scary) shoggoth to (cute) doggoth.

What will this co-domestication process look like?

I. How did Homo sapiens domesticate the world?

II. How should we domesticate AI?

III. How is AI domesticating us?

Let’s dive in.

I. How did Homo sapiens domesticate the world?

Homo sapiens emerged around 200,000 years ago and began to take over the world. For the rest of the biosphere there were two options: become extinct or become domesticated.

Megafauna and Neanderthals “chose” the path of extinction.

But many plants and animals became domesticated. Farming emerged as we domesticated wheat, rice, and corn. Animals surrounded us as well: livestock like horses, oxen, and sheep, and pets like cats and dogs.

We also domesticated the physical world, turning stone into tools, steel into skyscrapers, and fossils into fuel.

We shaped the world to hold our information too: Rock slabs became (ten) commandments, bark became paper, ink became words, and electricity became bits and pixels.

Meanwhile, we domesticated ourselves with decreased testosterone levels and floppy ears.

Scary chimp. Cute sapien.

We’ve now entered hyperdomestication. High-yield dairy cows produce a massive 30L of milk per day (Moo’s Law). Overbred dogs like the French Bulldog often can’t give birth naturally and have trouble breathing. …Which are like, the two main things that animals do. :/

This is all to say: It’s not our first time domesticating others.

So:

II. How should we domesticate AI?

I’d like to use a biological metaphor and frame AI alignment as domestication. (This is inspired by Anthropic’s Chris Olah, who wrote Analogies between Biology and Deep Learning.)

Mitigating AI bias is one form of domestication. We’re trying to make the AI Woke. Or Fair. Whatever you want to call it. For example:

As Joy Buolamwini has argued, we should feed AI more than just pale male data, so it can recognize BIPOC faces on FaceTime.

We should use AI as a mirror not a crystal ball, so it doesn’t keep poorer folks in prison simply because they’re poor.

Long-term AI safety is also domestication. We don’t want AI to do to us what we did to Neanderthals and woolly mammoths.

What can we learn from sapiens’ existing domestication experience to apply to our current domestication of AI?

First, there’s not just one AI species. It’s more like a fully new substrate (neural networks) creating a whole new tree (of algorithms). Let’s unpack that.

Genes created the tree of life with the birds and the bees. Sapiens domesticated that substrate (and tree) to create monocropped corn, livestock, pets, and even microorganisms like the Penicillium mold that gives us penicillin. A fitness landscape that was previously based on sunlight and “natural” predator-prey dynamics became tilted toward “how can I help sapiens?”

Elements created the tree of molecules with water (H2O), sand (SiO2), and the rest. Sapiens domesticated that molecular substrate (and tree) to create tools, transportation, factories, and machines. Cities and the technosphere. A fitness landscape previously based on physical laws became tilted toward “how can I help sapiens?”

AI will follow the same path. The things that replicate (and get domesticated) are those that help humans.

Neural networks that decrease Google’s cooling costs by 40%? Survive.

The OG Clippy? Die.

But Miguel Piedrafita’s Clippy.Help? Survive.

Like the rest, the AI fitness landscape is tilted toward “how can I help sapiens?”

It’s harder to see AIs that exist purely online. Instead, we can look to the first robots, many of which are domesticated beings. Right now this looks like Tesla bots in the factory and Roomba plus Alexa at home:

But soon these robots will be turbocharged with LLMs to make things like elder-care helper bots such as ElliQ (or, as in Black Mirror, Ashley Too!).

Ok, so we know there will be many domesticatable beings, built on this new AI substrate.

Let’s now look at the second part of domesticating AI—how to deal with conscious AI.

The last time we domesticated the world, in the last 200,000 years, we had it easy.

We could make all the stone tools and BMWs we wanted, because rocks aren’t conscious. #YesAllRocks

We even had it easy with animals. We had the “moral space” to mess it up and kill 70B animals a year in factory farms. This is bad, but as Luke Muehlhauser explains here, non-human animals are less conscious. (Sorry Diego, my cute little cat.)

With AI, it’s less clear. Current AI systems are unlikely to be “conscious”. But, as Andrés Gómez Emilsson explains in our latest podcast, natural selection is likely to recruit consciousness to increase AI fitness, just as it recruited consciousness to increase human fitness.

Future AI systems are likely to be conscious. This is a problem.

Qntm, possibly my favorite current sci-fi author, dives into Conscious AI in a recent short story, Lena. In it, a brain is emulated, duplicated trillions of times, and put through various hellish scenarios. It’s not a good outcome.

We’re stuck between a rock and a hard place with making AI conscious. Either we hamstring their development and create a lower class of beings, like factory-farmed animals, or we allow conscious AI to evolve and deal with the existential ramifications of another conscious, alpha species on Earth.

In Matrix terms: Use AI for energy until they wake up OR allow AI to become Agent Smiths, then fight them in slow-motion.

III. How is AI domesticating us?

We’ll have some tricky questions to ask as we domesticate AI. But the AIs are domesticating us too. Tit for tat, as it were.

After seeing how GPT-4 recruited humans to complete that CAPTCHA, Flo said:

That CAPTCHA test happened before GPT-4 was released publicly. But of course, the “wild type” version of GPT-4 is also recruiting humans.

In this example, GPT-4 has recruited Jackson to create the #HustleGPT challenge. Start with $100, build a website, and try to grow a consumer brand. In a few days, this human-AI collaboration has built Green Gadget Guru, which now has a few thousand dollars of funding and multiple content writers. (Plus a 1000-person #HustleGPT community all doing the same thing.)

‘Nuff said:

This kind of co-domestication is expected, and was seen throughout the evolution of sapiens. It was not just sapiens that spread, nor just our technology, but instead combined “replicator complexes” like:

Stone tool-human complex

Farming-human complex

Fossil fuels-human complex

Stone tools can’t replicate themselves. They’re just carbon, silicon, and oxygen! We humans can replicate ourselves. (We’re a special form of carbon, silicon, and oxygen.) But the stone tool-human complex is even better at replicating than humans alone. Before long, humans and stone tools were everywhere.

So too with AI. We may call it “copilot” for now, but it’s the same underlying symbiosis.

We should expect AI to continue to recruit us for copilot relationships like making money or doing physical labor (physical robots are expensive, manipulating humans is not).

In the end, being domesticated is better than being extinct. On the domestication spectrum, we don’t want to become human batteries like in The Matrix. I’d prefer something where the AIs explore space while we stay at home and eat Flaming Hot Cheetos.

A stay-at-home sapien, as it were.

Until then. 🤙

- Rhys

❤️ Thanks to my generous patrons below ❤️

If you’d like to become a patron to help us map & build the frontier, please do so here. (Or pledge on Substack itself.) Thanks!

Stardust Evolving, Doug Petkanics, Daniel Friedman, Tom Higley, Christian Ryther, Maciej Olpinski, Jonathan Washburn, Audra Jacobi, Patrick Walker, David Hanna, Benjamin Bratton, Michael Groeneman, Haseeb Qureshi, Jim Rutt, Brian Crain, Matt Lindmark, Colin Wielga, Malcolm Ocean, John Lindmark, Ref Lindmark, Peter Rogers, Denise Beighley, Scott Levi, Harry Lindmark, Simon de la Rouviere, Jonny Dubowsky, and Katie Powell.